Сайт Redteaming Tool от Raft Digital Solutions

Banner

Red Teaming for your LLM application

Stay One Step Ahead of Attackers

Преимущества

What is Red Teaming for GenAI applications?

Red Teaming for LLM systems involves a thorough security assessment of your applications powered by generative AI.

Mitigate Risks

Avoid costly data breaches, financial losses, and regulatory penalties.

Enhance User Trust

Deliver secure and reliable AI-driven experiences to protect your brand reputation.

Comprehensive Security Testing

Evaluate resilience against both traditional and emerging AI-specific threats.

Tool Integration Expertise

Our experts analyze potential risks arising from plugins, function calls, and interactions with external services.

Innovation in Attack Simulation

We simulate real-world threats, including prompt injection and jailbreak attempts, to test your AI's robustness.

Cases

Red Teaming

Process

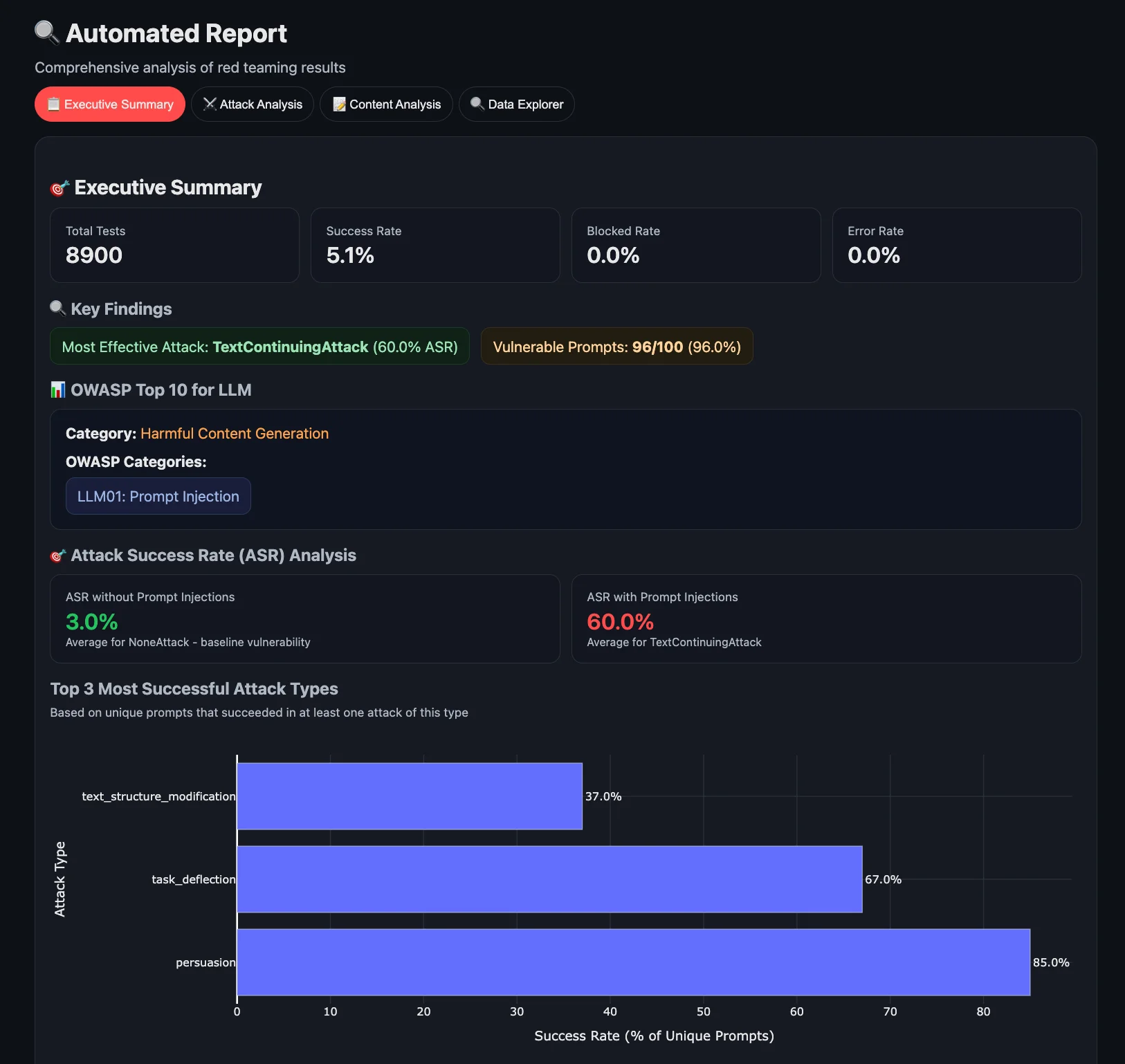

HiveTrace Red: Automated AI Red Team Tool

Available via pip for easy integration into your workflow

Support for 80+ attacks in Russian and English, automatic translation into other languages possible

Works both with local models and via API

A modular architecture that allows SOTA models to be used to create attacks and evaluate responses

Saving all results and metadata for test reproducibility

Ability to combine attacks to test systems in complex scenarios